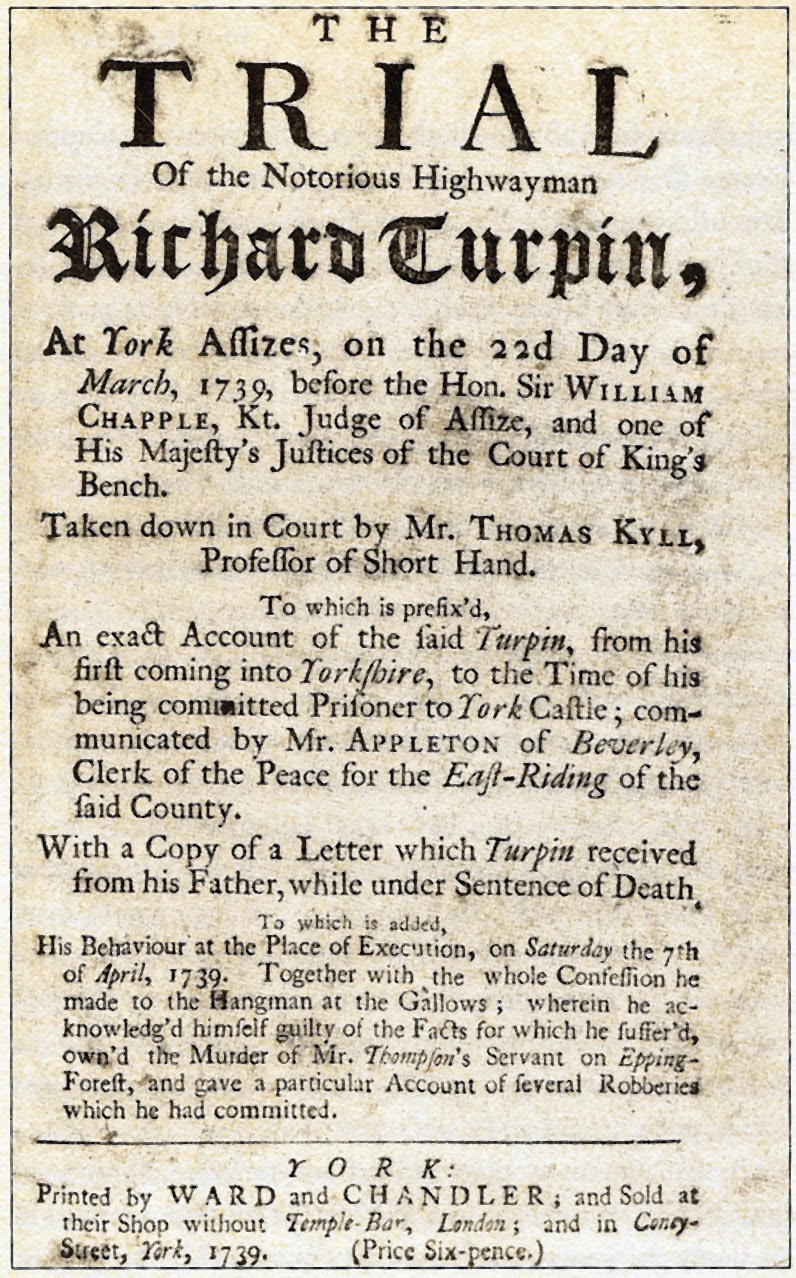

The Perfume Maker, Rudolf Ernst (1854-1932) (Wikicommons)

It has long been known that we vary not only in our sensitivity to different smells but also in our preferences for them, that is, the degree to which they seem pleasant or unpleasant. This variability often contains a large genetic component (Gross-Isseroff et al., 1992; Karstensen and Tommerup, 2012; Keller et al., 2007; Keller et al., 2012; Weiss et al., 2011). In the case of one odor, a single gene explains over 96% of the variability in smell sensitivity (Jaeger et al., 2013). A twin study has similarly found two odorants to be 78% and 73% heritable (Gross-Isseroff et al., 1992). This hardwiring is selective, however, because sensitivity to other odors can show little or no heritable variation (Hubert et al., 1980). There is also selective hardwiring in smell preferences. Different individuals will perceive androstenone, for instance, as offensive ('sweaty, urinous'), pleasant ('sweet, floral'), or odorless (Keller et al., 2007). It seems that selection has produced specific algorithms in the human brain for specific smells and that these algorithms can differ from one individual to the next (Keller et al., 2007; Knaapila et al., 2012).

This genetic variability exists between men and women, and also between age groups (Keller et al., 2012). Does it also exist between different human populations? The sense of smell does seem to matter more in some than in others, particularly hunter-gatherers:

People pay attention to smells when they are important to their daily lives and are not just part of the sensory and emotional background. This is certainly the case with the Umeda of New Guinea: in a tropical rainforest scent plays as important a role as sight in terms of spatial orientation. The Waanzi in southeast Gabon use odors daily in fishing, hunting, and gathering, thanks to a kind of 'olfactory apprenticeship' in family life and rituals of initiation. In Senegal, the Ndut are even more skillful: they are able to distinguish the odors of the different parts of plants and they are able to give a name to these odors. In our society, of course, most of us are incapable of this. (Candau, 2004)

For hunter-gatherers farther away from the tropics, the sense of smell matters less because the land supports a lower diversity of plant species and has less plant life altogether per unit of land area. Parallel to this north-south trend, more food comes from hunting of game animals and less from gathering of plant items. The end point is Arctic tundra, where opportunities for gathering are limited even in summer and where most food takes the form of meat. There, the senses of sight and sound matter more, being of greater value for long-range detection and tracking of game animals.

Candau (2004) sees these differences between human groups as evidence for "cultural influences" rather than "genetic inheritance." The two are not mutually exclusive: culture itself can select for some heritable abilities over others. On this point, it may be significant that different human groups continue to show differences in smell sensitivity and preference long after their ancestors moved to very different environments. Thus, in a study from New York City, Euro-American and African American participants were exposed to a wide range of odors. The two groups differed in their pleasantness ratings of 18 of the 134 stimuli, generally floral or vegetative odors. Moreover, in 14 of the 18, the African Americans were the ones who responded more positively (Keller et al., 2012).

In addition, the African Americans responded more readily to aromatic metabolites of the male hormone testosterone, i.e., androstadienone and androstenone. The authors note: "This is consistent with the finding from the National Geographic Smell Survey, which found that African respondents were more sensitive to androstenone than American respondents. This difference is undoubtedly at least partially caused by the fact that the functional RT variant of OR7D4 is more common in African-Americans than in Caucasians" (Keller et al., 2012).

Gene-culture coevolution during historic times

This coevolution did not stop with hunter-gatherers. Beginning 10,000 years ago, some of them became farmers and that change set off a lot of other changes: population growth, land ownership, creation of a class system, social inequality on a much greater scale, year-round settlement in villages and then in towns and cities ... And on and on. We entered new environments—not natural ones of climate and vegetation, but rather human-made ones.

These environments offered us new olfactory stimuli: salves, perfumes, incense, scented oils, aromatic baths ... Havlicek and Roberts (2013) argue that our sense of smell coevolved with human-made fragrances and that this coevolution went on for the longest in the Middle East and South Asia, where the use of perfumes is attested as early as the fourth millennium B.C. (Wikipedia, 2015). A sort of positive feedback then developed between use of these fragrances and praise of them in prose, song, and poetry, the two reinforcing each other and thereby strengthening the pressure of selection. This may be seen in the Bible:

The Hebrew Song of Songs furnishes a typical example of a very beautiful Eastern love-poem in which the importance of the appeal to the sense of smell is throughout emphasized. There are in this short poem as many as twenty-four fairly definite references to odors,—personal odors, perfumes, and flowers,—while numerous other references to flowers, etc., seem to point to olfactory associations. Both the lover and his sweetheart express pleasure in each other's personal odor.

"My beloved is unto me," she sings, "as a bag of myrrh

That lieth between my breasts;

My beloved is unto me as a cluster of henna flowers

In the vineyard of En-gedi."

And again: "His cheeks are as a bed of spices [or balsam], as banks of sweet herbs." While of her he says: "The smell of thy breath [or nose] is like apples." (Ellis, 1897-1928)

In the 9th century the Arab chemist Al-Kindi wrote the Book of the Chemistry of Perfume and Distillations, which contained more than a hundred recipes for fragrant oils, salves, and aromatic waters (Wikipedia, 2015). Today, the names of our chief perfumes are often of Arabic or Persian origin: civet, musk, ambergris, attar, camphor …

Finally, the use of perfumes, like kissing and cosmetics in general, moved the center of sexual interest away from the genitals and toward the face, thereby creating a second channel of arousal:

[...] the focus of olfactory attractiveness has been displaced. The centre of olfactory attractiveness is not, as usually among animals, in the sexual region, but is transferred to the upper part of the body. In this respect the sexual olfactory allurement in man resembles what we find in the sphere of vision, for neither the sexual organs of man nor of woman are usually beautiful in the eyes of the opposite sex, and their exhibition is not among us regarded as a necessary stage in courtship. The odor of the body, like its beauty, in so far as it can be regarded as a possible sexual allurement, has in the course of development been transferred to the upper parts. The careful concealment of the sexual region has doubtless favored this transfer. (Ellis, 1897-1928)

Differences between human populations?

To recapitulate, we humans vary a lot in the degree to which we have been exposed to perfumes and to a perfume-friendly culture, a possible analogy being the degree to which we have been exposed to alcoholic beverages. There may thus have been selection against individuals whose own smell preferences or body chemistry failed to match the perfumes available, this selection being not only stronger in some populations but also qualitatively different:

[...] individual communities vary considerably in the substances they employ for perfume production (in most of the speculations below we deliberately ignore recent trends such as technological advancement in global transfer of goods and production of synthetic chemicals used in perfumery: these phenomena appeared only very recently and one might not expect their immediate effect on biological evolution which operates on a much longer time scale). The absence of a specific ingredient in the perfumes of a particular community could be due to the following reasons: (1) the source of the odour is unavailable in the area and is not traded from neighbours. For example, we know that aromatic plants were an important commodity in trading networks in Ancient Egypt or Greece, but some of the scents routinely employed in that era in India were rare or absent in Mediterranean cultures. (2) The community is constrained by a technology. Some of the aromas can be extracted only using a specific technology which might not be available for or discovered by the particular community. In ancient Greece, for instance, ethanol distillation was not used and perfumers instead used mechanic extraction or enfleurage (Brun 2000). (3) Particular scents or their source (e.g. a particular plant) are believed to be inappropriate for body adornment. Such beliefs might stem from religious considerations.

[...] considering that only some scent ingredients will complement particular body odours (i.e. particular genotypes) and that a particular community employs only a restricted variety of scents for perfuming, it is plausible that some individuals may not be able to select a perfume which complements their body odour and may therefore suffer a social disadvantage. In the long run, the frequency of genotypes of such individuals would decrease in the particular community. (Havlicek and Roberts, 2013)

It is disappointing that Havlicek and Roberts do not develop this argument further with plausible evidence for such gene-culture coevolution. For instance, Hall et al. (1968) discussed how smell and touch hold greater importance for Arabs than for Americans. This point has likewise been remarked upon with respect to the Gulf countries:

The importance of good smell in Qatari homes is inherent in the requirement of cleanness and purity (taharah) in Islam, both physical and spiritual (Sobh and Belk, 2010). Purity, cleanness, and good smell are central to Muslims everywhere in the world, but the obsession with perfuming bodies and homes is something of a fetish in Gulf countries and is very prominent in Qatar. (Sobh and Belk, 2011)

This heightened smell sensitivity is all the more striking because plant life is less abundant and less diverse in the Middle East, certainly in comparison with the tropics. It seems unlikely, then, that this sensitivity was acquired during the hunter-gatherer stage of cultural evolution. It is more likely a later development, possibly through coevolution with the development of perfumes in historic times.

References

Candau, J. (2004). The olfactory experience: constants and cultural variables, Water Science & Technology, 49, 11-17.

https://halshs.archives-ouvertes.fr/halshs-00130924/

Ellis, H. (1897-1928). Studies in the Psychology of Sex, vol. IV, Appendix A. The origins of the kiss.

https://www.gutenberg.org/files/13613/13613-h/13613-h.htm

Gross-Isseroff, R., D. Ophir, A. Bartana, H. Voet, and D. Lancet. (1992). Evidence for genetic determination in human twins of olfactory thresholds for a standard odorant, Neuroscience Letters, 141, 115-118.

http://www.sciencedirect.com/science/article/pii/030439409290347A

Hall, E.T., R.L. Birdwhistell, B. Bock, P. Bohannan, A.R. Diebold, Jr., M. Durbin, M.S. Edmonson, J.L. Fischer, D. Hymes, S.T. Kimball, W. La Barre, F. Lynch, J.E. McClellan, D.S. Marshall, G.B. Milner, H.B. Sarles, G.L. Trager, and A.P. Vayda. (1968). Proxemics, Current Anthropology, 9, 83-108.

http://www.jstor.org/stable/2740724?seq=1#page_scan_tab_contents

Havlíček, J., and S.C. Roberts. (2013). The Perfume-Body Odour Complex: An Insightful Model for Culture-Gene Coevolution? Chemical Signals in Vertebrates, 12, 185-195.

http://www.scraigroberts.com/uploads/1/5/0/4/15042548/2013_csiv_perfume.pdf

Hubert, H.B., R.R. Fabsitz, M. Feinleib, and K.S. Brown. (1980). Olfactory sensitivity in humans: genetic versus environmental control, Science, 208, 607-609.

http://www.sciencemag.org/content/208/4444/607.short

Jaeger, S.R., J.F. McRae, C.M. Bava, M.K. Beresford, D. Hunter, Y. Jia et al. (2013). A Mendelian Trait for Olfactory Sensitivity Affects Odor Experience and Food Selection, Current Biology, 23, 1601-1605.

http://www.cell.com/current-biology/abstract/S0960-9822(13)00853-1?_returnURL=http%3A%2F%2Flinkinghub.elsevier.com%2Fretrieve%2Fpii%2FS0960982213008531%3Fshowall%3Dtrue

Knaapila, A., G. Zhu, S.E. Medland, C.J. Wysocki, G.W. Montgomery, N.G. Martin, M.J. Wright, and D.R. Reed. (2012). A genome-wide study on the perception of the odorants androstenone and galaxolide, Chemical Senses, 37, 541-552.

http://www.researchgate.net/profile/Danielle_Reed2/publication/221859158_A_genome-wide_study_on_the_perception_of_the_odorants_androstenone_and_galaxolide/links/02e7e52b367564a573000000.pdf

Karstensen, H.G. and N. Tommerup. (2012). Isolated and syndromic forms of congenital anosmia, Clinical Genetics, 81, 210-215.

Keller, A., M. Hempstead, I.A. Gomez, A.N. Gilbert, and L.B. Vosshall. (2012). An olfactory demography of a diverse metropolitan population, BMC Neuroscience, 13, 122.

http://www.biomedcentral.com/1471-2202/13/122/

Keller, A., H. Zhuang, Q. Chi, L.B. Vosshall, and H. Matsunami. (2007). Genetic variation in a human odorant receptor alters odour perception, Nature, 449, 468-472.

http://vosshall.rockefeller.edu/reprints/KellerMatsunamiNature07.pdf

Sobh, R. and R. Belk. (2011). Domains of privacy and hospitality in Arab Gulf homes, Journal of Islamic Marketing, 2, 125-137.

http://www.researchgate.net/profile/Rana_Sobh/publication/241699027_Domains_of_privacy_and_hospitality_in_Arab_Gulf_homes/links/54a1374b0cf257a63602614b.pdf

Weiss, J., M. Pyrski, E. Jacobi, B. Bufe, V. Willnecker, B. Schick, P. Zizzari, S.J. Gossage, C.A. Greer, T. Leinders-Zufall, et al. (2011). Loss-of-function mutations in sodium channel Nav1.7 cause anosmia, Nature, 472, 186-190.

Wikipedia (2015). Perfume

https://en.wikipedia.org/wiki/Perfume
